A user agent is a string of text that a web browser or other client software sends to a web server along with each request to identify itself and provide information about its capabilities.

The user agent string can contain information such as the browser name and version, the operating system, and other details.

Site owners can block user agents known to be associated with malicious activity, such as bots and scrapers, with the help of Cloudflare Firewall Rules.

In this post, you will learn all you need to know about blocking user agents for security purposes.

What User Agents to Block?

Some examples of user agents that may be associated with malicious activity and should be blocked for security purposes include:

| python | Go-http-client |

| curl | github |

| Apache | Scrapy |

| ruby | wp_is_mobile |

| okhttp | colly |

Where to get a List of User Agents to Block?

I know I suggested blocking ten user agents, but I don’t really believe in copying someone else’s massive list of “malicious” user agents and turning it into a firewall rule.

A lot of automated bot traffic uses very specific user agents that may be causing problems for other websites and will likely never come anywhere near yours.

A better approach is to review your own firewall logs and identify the user agents that are actually attempting to target your site.
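If you can export your logs, a few lines of Python are enough to see which user agents actually show up. This is only a rough sketch: it assumes the lines are in the common "combined" access log format (user agent as the last quoted field), and the sample lines, IP addresses and the `count_user_agents` helper are made up for illustration.

```python
import re
from collections import Counter

# Assumption: lines follow the "combined" access log format, where the
# user agent is the last double-quoted field on the line.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def count_user_agents(lines):
    """Return a Counter mapping each user agent string to its request count."""
    counts = Counter()
    for line in lines:
        match = UA_PATTERN.search(line)
        if match:
            # Group empty user agents together with "-" in a single bucket.
            counts[match.group(1) or "-"] += 1
    return counts


# Made-up sample lines; replace them with lines read from your own log file.
sample_log = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" "python-requests/2.31.0"',
    '203.0.113.5 - - [10/Oct/2024:13:55:37 +0000] "GET /wp-login.php HTTP/1.1" 404 0 "-" "python-requests/2.31.0"',
    '198.51.100.7 - - [10/Oct/2024:13:56:01 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]

# Print the most frequent user agents first.
for agent, hits in count_user_agents(sample_log).most_common():
    print(hits, agent)
```

The user agents at the top of that list, especially the ones hitting pages that do not exist on your site, are better candidates for a rule than anything on a generic blocklist.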

Blocking SEO Tools and the Other Guys

Blocking certain types of scrapers or SEO tools can be done by blocking their user agent.

You can block non-malicious bots such as Ahrefs and Moz, or search engine crawlers such as Yandex.

The good thing about these services is that their bots identify themselves as bots.

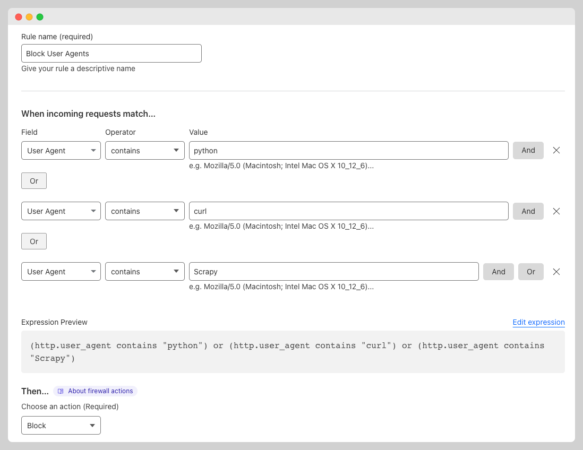

How to Block User Agents with Cloudflare Firewall Rules

Blocking user agents with Cloudflare is a simple process that can help protect your website from unwanted traffic or bots.

Here’s how to do it:

- Log into your Cloudflare account and select the website that you want to block user agents for.

- Click on the “Security” tab, then click on the “WAF” button.

- Click on the “create firewall rule” button.

- Name the rule.

- Choose “user agent” from the field drop-down menu.

- Choose “contains” from the operator drop-down menu.

- Add a keyword in the “value” field.

- Use “or” to target other user agents.

- Choose “block”.

- Click the “Deploy Firewall Rule” button.

As you can see, the process is really simple.

You can update, remove, or add keywords as you see fit.
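If your keyword list grows, you may prefer to paste an expression into the rule's expression editor instead of clicking through the drop-downs. Here is a minimal sketch that joins keywords into one expression using the same `http.user_agent` field shown elsewhere in this post. The `build_user_agent_expression` helper is a hypothetical name of mine, not something Cloudflare provides.

```python
def build_user_agent_expression(keywords):
    """Join keywords into one 'contains' expression separated by 'or'."""
    clauses = []
    for keyword in keywords:
        # Escape backslashes and double quotes so the keyword stays inside
        # its quoted string.
        escaped = keyword.replace("\\", "\\\\").replace('"', '\\"')
        clauses.append(f'(http.user_agent contains "{escaped}")')
    return " or ".join(clauses)


print(build_user_agent_expression(["python", "curl", "Scrapy"]))
# (http.user_agent contains "python") or (http.user_agent contains "curl") or (http.user_agent contains "Scrapy")
```

As far as I know, `contains` matching is case-sensitive, so "scrapy" and "Scrapy" would need separate keywords unless you normalize the case in the expression.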

Challenge Bad Bots

A Managed Challenge automatically decides the best challenge type based on the visitor’s behavior and reputation.

Legitimate users usually pass without noticing, while bots get slowed down or blocked.

So if you don’t feel like blocking is the best alternative, maybe give “managed challenges” a try.

Challenge Bots with Empty User Agent

An empty User-Agent is a common signal of non-browser traffic and is often associated with bots, scripts, or poorly configured crawlers.

This is how you tackle those bad bots with a Cloudflare Firewall Rule:

(http.user_agent eq "")

After enabling the rule, review the events in your firewall logs and escalate to Block only if the traffic is clearly malicious.

Blocking AI Bots

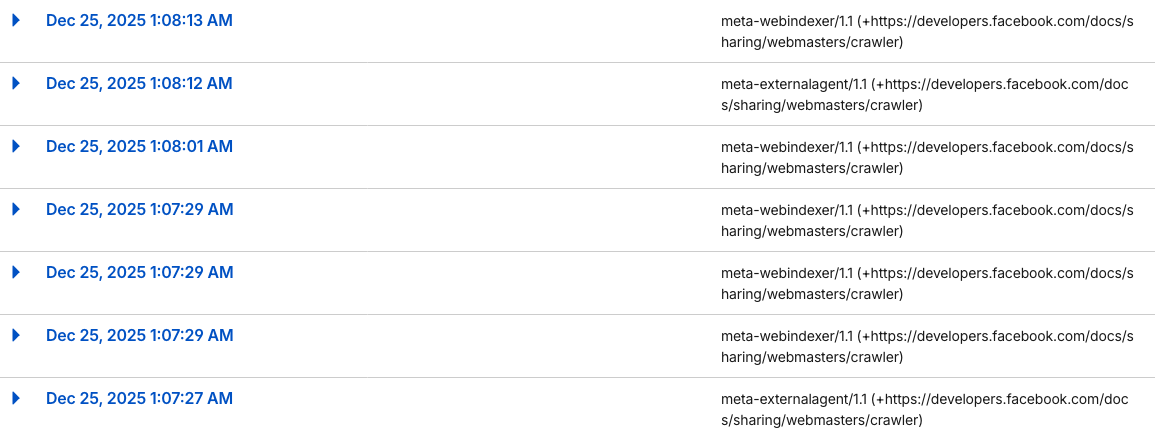

I hadn’t really thought about blocking AI bots before, mainly because my site is 100% static, so server resources haven’t been a big concern for me.

That said, it might be worth taking a closer look at what Meta’s indexer bots have been doing lately.

They’ve been generating a noticeable amount of crawl traffic, and it’s more than I’d expect.

For reference, this is the Meta user agent I’m seeing:

meta-webindexer/1.1 (+https://developers.facebook.com/docs/sharing/webmasters/crawler)

Even on a static site, that extra activity can still inflate page views and muddy your metrics.

What’s more, it doesn’t seem to result in any real traffic or added visibility.

From what I can tell, the bots are mostly scanning content for analysis or AI training, without providing any clear upside.

Here’s the proof of what I’m seeing.

It’s also probably a good idea to keep an eye on other AI-related bots as well.

Blocking User Agents is not the Ultimate Security Measure

Blocking certain user agents can improve the security of a WordPress site by preventing certain types of bots or automated scripts from accessing the site.

However, blocking user agents is not a complete solution to securing a WordPress site.

User agents can be easily faked by malicious actors in order to bypass security measures that are in place to block certain types of user agents.

This can be done by modifying the user agent string that is sent with HTTP requests to make it appear as if the request is coming from a different type of device or browser.

This is an example of a user agent a bad bot could use:

Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:102.0) Gecko/20100101 Firefox/102.0

For example, a hacker could change their user agent to that of a search engine crawler in order to gain access to parts of a website that are otherwise blocked to regular users.
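To show how little effort faking a user agent takes, here is a small sketch using Python's standard library. It only builds the request and never sends it, and `example.com` is a placeholder rather than a real target.

```python
import urllib.request

# The Firefox user agent string quoted above; any client can claim it.
SPOOFED_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:102.0) Gecko/20100101 Firefox/102.0"

# example.com is a placeholder; the request is built here but never sent.
request = urllib.request.Request(
    "https://example.com/",
    headers={"User-Agent": SPOOFED_UA},
)

# urllib stores header names capitalized as "User-agent".
print(request.get_header("User-agent"))
```

One dictionary entry is all it takes, which is why a user agent rule should be treated as a filter for lazy bots and not as proof of who is knocking.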

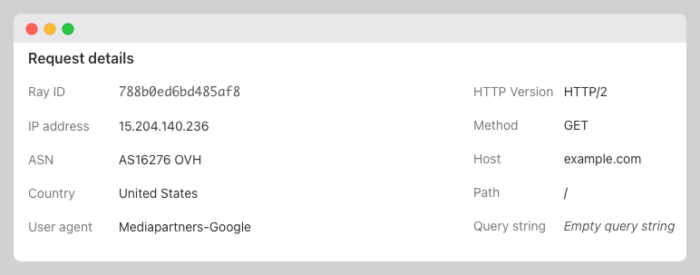

Is Google using OVH servers now?

I don’t think so.

When it comes to user agents, you can be any big organization you want.
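If you want to check a claim like that yourself, one option is a reverse DNS lookup on the IP address followed by a forward lookup to confirm it. The sketch below is an illustration under my own assumptions: the domain suffixes are the ones I understand Google to publish for its crawlers, the function names are hypothetical, and the forward lookup shown here only handles IPv4.

```python
import socket

# Assumption: genuine Google crawler hosts resolve to names under these domains.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_google_hostname(hostname):
    """Check whether a reverse DNS name falls under one of the Google domains."""
    return hostname.lower().rstrip(".").endswith(GOOGLE_SUFFIXES)


def looks_like_real_googlebot(ip_address):
    """Reverse-resolve the IP, check the domain, then confirm with a forward lookup."""
    try:
        hostname = socket.gethostbyaddr(ip_address)[0]
        if not is_google_hostname(hostname):
            return False
        # The forward lookup must map the hostname back to the same IP (IPv4 only).
        return ip_address in socket.gethostbyname_ex(hostname)[2]
    except OSError:
        # A failed lookup counts as "not verified".
        return False
```

A request that claims to be Googlebot but resolves to a hosting provider's hostname would fail the first check, no matter what its user agent says.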